Saturday, January 14, 2017

Text the Police

from Google Alert - anonymous http://ift.tt/2jcndyl

via IFTTT

Student Faces 10 Years In Prison For Creating And Selling Limitless Keylogger

from The Hacker News http://ift.tt/2irHUnP

via IFTTT

Support anonymous user

from Google Alert - anonymous http://ift.tt/2jIt911

via IFTTT

Explained — What's Up With the WhatsApp 'Backdoor' Story? Feature or Bug!

from The Hacker News http://ift.tt/2jjgEMa

via IFTTT

Offering to maintain Anonymous login module

from Google Alert - anonymous http://ift.tt/2jatAlz

via IFTTT

Exploring Earth's Ionosphere: Limb view with approach

from NASA's Scientific Visualization Studio: Most Recent Items http://ift.tt/2iuKGfq

via IFTTT

Exploring Earth's Ionosphere: Limb view

from NASA's Scientific Visualization Studio: Most Recent Items http://ift.tt/2jtKBXP

via IFTTT

Friday, January 13, 2017

Anonymous squeals on Wikileaks & Julian Assange

from Google Alert - anonymous http://ift.tt/2jGGWF4

via IFTTT

Orioles and P Chris Tillman agree to one-year, $10.5 million deal to avoid arbitration; 16-6 with 3.77 ERA in 2016 (ESPN)

via IFTTT

Anonymous Publish Events

from Google Alert - anonymous http://ift.tt/2iucyQR

via IFTTT

anonymous $5000 donation vaults fundraiser for injured food cart vendor closer to goal

from Google Alert - anonymous http://ift.tt/2ilWOkn

via IFTTT

Ravens: Former president David Modell, 55, dies after battle with lung cancer; son of late owner Art Modell (ESPN)

via IFTTT

I have a new follower on Twitter

MLB Roundup

Here to enjoy the ride of @MLB with everyone! #MLB #FantasyBaseball

Following: 6666 - Followers: 35835

January 13, 2017 at 03:02PM via Twitter http://twitter.com/MLB_Roundup

Orioles and 3B Manny Machado agree to one-year, $11.5M deal to avoid arbitration - reports; .294 with 37 HR in 2016 (ESPN)

via IFTTT

Orioles and P Zach Britton agree to one-year, $11.4M deal to avoid arbitration - Crasnick; 0.54 ERA and 47 saves in 2016 (ESPN)

via IFTTT

Table of Contents: Deep Learning for Computer Vision with Python

A couple of days ago I mentioned that on Wednesday, January 18th at 10AM EST I am launching a Kickstarter to fund my new book — Deep Learning for Computer Vision with Python.

As you’ll see later in this post, there is a huge amount of content I’ll be covering, so I’ve decided to break the book down into three volumes called “bundles”.

A bundle includes the eBook, video tutorials, and source code for a given volume.

Each bundle builds on top of the others and includes all content from lower bundles. You should choose a bundle based on how in-depth you want to study deep learning and computer vision:

- Starter Bundle: A great fit for those taking their first steps toward deep learning for image classification mastery.

- Practitioner Bundle: Perfect for readers who are ready to study deep learning in-depth, understand advanced techniques, and discover common best practices and rules of thumb.

- ImageNet Bundle: The complete deep learning for computer vision experience. In this bundle, I demonstrate how to train large-scale neural networks from scratch on the massive ImageNet dataset. You can’t beat this bundle.

The complete Table of Contents for each bundle is listed in the next section.

Starter Bundle

The Starter Bundle includes the following topics:

Machine Learning Basics

Take the first step:

- Learn how to set up and configure your development environment to study deep learning.

- Understand image basics, including coordinate systems; width, height, depth; and aspect ratios.

- Review popular image datasets used to benchmark machine learning, deep learning, and Convolutional Neural Network algorithms.

Form a solid understanding of machine learning basics, including:

- The simple k-NN classifier.

- Parameterized learning (i.e., “learning from data”)

- Data and feature vectors.

- Understanding scoring functions.

- How loss functions work.

- Defining weight matrices and bias vectors (and how they facilitate learning).

Study basic optimization methods (i.e., how “learning” is actually done) via:

- Gradient Descent

- Stochastic Gradient Descent

- Batched Stochastic Gradient Descent
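
To make the three optimizers above concrete, here is a minimal sketch of mini-batch SGD fitting a one-variable linear model under a squared-error loss. The function name `sgd_fit`, the toy data, and the hyperparameters are assumptions made for this illustration, not material from the book.

```python
import random

def sgd_fit(xs, ys, lr=0.05, epochs=200, batch_size=2, seed=0):
    """Fit y ~ w * x + b by mini-batch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical toy data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = sgd_fit(xs, ys)
```

In this sketch, setting `batch_size` to the dataset size gives plain gradient descent, and setting it to 1 gives per-sample stochastic gradient descent.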

Fundamentals of Neural Networks

Discover feedforward network architectures:

- Implement the classic Perceptron algorithm by hand.

- Use the Perceptron algorithm to learn actual functions (and understand the limitations of the Perceptron algorithm).

- Take an in-depth dive into the Backpropagation algorithm.

- Implement Backpropagation by hand using Python + NumPy.

- Utilize a worksheet to help you practice the Backpropagation algorithm.

- Grasp multi-layer networks (and train them from scratch).

- Implement neural networks both by hand and with the Keras library.
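
As a rough illustration of the Perceptron bullets above, the sketch below trains a two-input Perceptron with a step activation on AND (which is linearly separable) and on XOR (which is not). The helper names and the training settings are assumptions for this example only.

```python
def train_perceptron(samples, epochs=20, lr=1):
    """Train a two-input Perceptron with a step activation."""
    w = [0, 0]
    b = 0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = target - pred
            # Perceptron rule: the weights move only when the prediction is wrong.
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

def predict(w, b, x1, x2):
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and, b_and = train_perceptron(AND)
w_xor, b_xor = train_perceptron(XOR)
and_correct = sum(predict(w_and, b_and, *x) == t for x, t in AND)
xor_correct = sum(predict(w_xor, b_xor, *x) == t for x, t in XOR)
```

The Perceptron classifies all four AND inputs correctly, but no single linear boundary can separate XOR, so at least one XOR input is always misclassified. That limitation is what motivates multi-layer networks.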

Introduction to Convolutional Neural Networks

Start with the basics of convolutions:

- Understand convolutions (and why they are so much easier to grasp than they seem).

- Study Convolutional Neural Networks (what they are used for, why they work so well for image classification, etc.).

- Train your first Convolutional Neural Network from scratch.

Review the building blocks of Convolutional Neural Networks, including:

- Convolutional layers

- Activation layers

- Pooling layers

- Batch Normalization

- Dropout

Uncover common architectures and training patterns:

- Discover common network architecture patterns you can use to design architectures of your own with minimal frustration and headaches.

- Utilize out-of-the-box CNNs for classification that are pre-trained and ready to be applied to your own images/image datasets (VGG16, VGG19, ResNet50, etc.)

- Save and load your own network models from disk.

- Checkpoint your models to spot high performing epochs and restart training.

- Learn how to spot underfitting and overfitting, allowing you to correct for them and improve your classification accuracy.

- Utilize decay and learning rate schedulers.

- Train the classic LeNet architecture from scratch to recognize handwritten digits.

Work With Your Own Custom Datasets

Working with your custom datasets + deep learning is easy:

- Learn how to gather your own training images.

- Discover how to annotate and label your dataset.

- Train a Convolutional Neural Network from scratch on top of your dataset.

- Evaluate the accuracy of your model.

- …all of this explained by demonstrating how to gather, annotate, and train a CNN to break image captchas.

Practitioner Bundle

The Practitioner Bundle includes everything in the Starter Bundle. It also includes the following topics.

Advanced Convolutional Neural Networks

Discover how to use transfer learning to:

- Treat pre-trained networks as feature extractors to obtain high classification accuracy with little effort.

- Utilize fine-tuning to boost the accuracy of pre-trained networks.

- Apply data augmentation to increase network classification accuracy without gathering more training data.

Work with deeper network architectures:

- Code the seminal AlexNet architecture.

- Implement the VGGNet architecture (and its variants).

Explore more advanced optimization algorithms, including:

- RMSprop

- Adagrad

- Adadelta

- Adam

- Adamax

- Nadam

- …and best practices to fine-tune SGD parameters.

Best Practices to Boost Network Performance

Uncover common techniques & best practices to improve classification accuracy:

- Understand rank-1 and rank-5 accuracy (and how we use them to measure the classification power of a given network).

- Utilize image cropping for an easy way to boost accuracy on your test set.

- Explore how network ensembles can be used to increase classification accuracy simply by training multiple networks.

- Discover my optimal pathway for applying deep learning techniques to maximize classification accuracy (and which order to apply these techniques in to achieve greatest effectiveness).
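
For readers unfamiliar with rank-1 and rank-5 accuracy, the following sketch shows one way to compute rank-k accuracy from per-class scores. The function name `rank_k_accuracy` and the three-class toy scores are assumptions for illustration; with only three classes, rank-2 stands in for rank-5.

```python
def rank_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for class_scores, label in zip(scores, labels):
        ranked = sorted(range(len(class_scores)),
                        key=lambda c: class_scores[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)

# Hypothetical scores for four samples over three classes.
scores = [
    [0.1, 0.7, 0.2],  # true class 1: top prediction is correct
    [0.5, 0.3, 0.2],  # true class 1: correct only within the top 2
    [0.6, 0.3, 0.1],  # true class 2: missed even within the top 2
    [0.2, 0.2, 0.6],  # true class 2: top prediction is correct
]
labels = [1, 1, 2, 2]
rank1 = rank_k_accuracy(scores, labels, k=1)
rank2 = rank_k_accuracy(scores, labels, k=2)
```

Here `rank1` is 0.5 and `rank2` is 0.75: widening the window from the top prediction to the top two recovers one additional sample.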

Scaling to Large Image Datasets

Work with datasets too large to fit into memory:

- Learn how to convert an image dataset from raw images on disk to HDF5 format, making networks easier (and faster) to train.

- Compress large image datasets into efficiently packed record files.

Compete in deep learning challenges and competitions:

- Compete in Stanford’s cs231n Tiny ImageNet classification challenge…and take home the #1 position.

- Train a network on the Kaggle Dogs vs. Cats challenge and claim a position in the top-25 leaderboard with minimal effort.

Object Detection and Localization

Detect objects in images using deep learning by:

- Utilizing naive image pyramids and sliding windows for object detection.

- Training your own YOLO detector for recognizing objects in images/video streams in real-time.
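
The image pyramid and sliding window approach mentioned above can be sketched with plain coordinate arithmetic. The function names, the scale factor of 2, and the window sizes are assumptions for this example; a real detector would crop each window from the resized image and pass it to a classifier.

```python
def sliding_window(width, height, window, step):
    """Yield (x, y) top-left corners of every window that fits inside the image."""
    win_w, win_h = window
    for y in range(0, height - win_h + 1, step):
        for x in range(0, width - win_w + 1, step):
            yield x, y

def pyramid_sizes(width, height, scale=2.0, min_size=(32, 32)):
    """Yield successively smaller (width, height) pairs until min_size is reached."""
    while width >= min_size[0] and height >= min_size[1]:
        yield width, height
        width, height = int(width / scale), int(height / scale)

# A hypothetical 128x128 image scanned with a 32x32 window.
levels = list(pyramid_sizes(128, 128))
windows = list(sliding_window(128, 128, window=(32, 32), step=32))
```

With these settings the pyramid has three levels (128, 64, and 32 pixels on a side) and the full-resolution level yields 16 non-overlapping windows. A smaller `step` produces overlapping windows at the cost of more classifier calls.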

ImageNet Bundle

The ImageNet Bundle includes everything in the Starter Bundle and Practitioner Bundle. It also includes the following additional topics:

ImageNet: Large Scale Visual Recognition Challenge

Train state-of-the-art networks on the ImageNet dataset:

- Discover what the massive ImageNet (1,000 category) dataset is and why it’s considered the de-facto challenge to benchmark image classification algorithms.

- Obtain the ImageNet dataset.

- Convert ImageNet into a format suitable for training.

- Learn how to utilize multiple GPUs to train your network in parallel, greatly reducing training time.

- Train AlexNet on ImageNet from scratch.

- Train VGGNet from the ground-up on ImageNet.

- Apply the SqueezeNet architecture to ImageNet to obtain a (high accuracy) model, fully deployable to smaller devices, such as the Raspberry Pi.

ImageNet: Tips, Tricks, and Rules of Thumb

Unlock the same techniques deep learning pros use on ImageNet:

- Save weeks (and even months) of training time by discovering learning rate schedules that actually work.

- Spot overfitting on ImageNet and catch it before you waste hours (or days) watching your validation accuracy stall.

- Learn how to restart training from saved epochs, lower learning rates, and boost accuracy.

- Uncover methods to quickly tune hyperparameters to massive networks.

Case Studies

Discover how to solve real-world deep learning problems, including:

- Train a network to predict the gender and age of people in images using deep learning techniques.

- Automatically classify car types using Convolutional Neural Networks.

- Determine (and correct) image orientation using CNNs.

So there you have it — the complete Table of Contents for Deep Learning for Computer Vision with Python. I hope after looking over this list you’re as excited as I am!

I also have some secret bonus chapters that I’m keeping under wraps until the Kickstarter officially launches — stay tuned for more details.

To be notified when more Kickstarter announcements go live (including ones I won’t be publishing on this blog), be sure to sign up for the Kickstarter notification list!

The post Table of Contents: Deep Learning for Computer Vision with Python appeared first on PyImageSearch.

from PyImageSearch http://ift.tt/2iQ6OP1

via IFTTT

WhatsApp Backdoor allows Hackers to Intercept and Read Your Encrypted Messages

from The Hacker News http://ift.tt/2ikRZHY

via IFTTT

Anonymous Review: Wait for Me

from Google Alert - anonymous http://ift.tt/2j8gjdr

via IFTTT

Orioles: IF Ryan Flaherty ($1.8M) and P T.J. McFarland ($685,000) agree to one-year deals to avoid arbitration - reports (ESPN)

via IFTTT

ISS Daily Summary Report – 1/12/2017

from ISS On-Orbit Status Report http://ift.tt/2jrjcWv

via IFTTT

[FD] Nginx (Debian-based + Gentoo distros) - Root Privilege Escalation [CVE-2016-1247 UPDATE]

Source: Gmail -> IFTTT-> Blogger

Donald Trump appoints a CyberSecurity Advisor Whose Own Site is Damn Vulnerable

from The Hacker News http://ift.tt/2ijyJuv

via IFTTT

dotnet/roslyn

from Google Alert - anonymous http://ift.tt/2iqEyEQ

via IFTTT

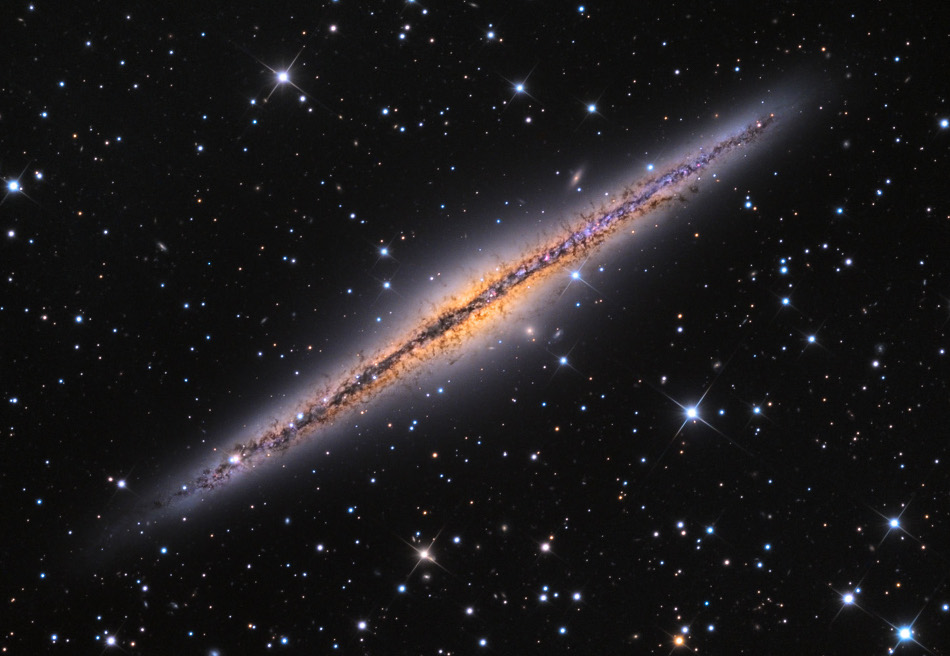

Edge On NGC 891

Ye Olde Tyme Heliophysics Map

from NASA's Scientific Visualization Studio: Most Recent Items http://ift.tt/2jdHA0r

via IFTTT

Thursday, January 12, 2017

Anonymous image board by state

from Google Alert - anonymous http://ift.tt/2iiYRWs

via IFTTT

Proxy server anonymous ip address

from Google Alert - anonymous http://ift.tt/2jC08nC

via IFTTT

Assertional Logic: Towards an Extensible Knowledge Model (extended abstract). (arXiv:1701.03322v1 [cs.AI])

We argue that extensibility is a key challenge for knowledge representation. For this purpose, we propose assertional logic - a knowledge model for easier extension with new AI building blocks. In assertional logic, all syntactic objects are categorized as set theoretic constructs including individuals, concepts and operators, and all kinds of knowledge are formalized by equality assertions. When extending with a new building block, one only needs to consider its interactions with the basic form of knowledge (i.e., equality assertions) without going deeper into its interactions with other existing ones. We first present a primitive form of assertional logic that uses minimal assumed knowledge and constructs. Then, we show how to extend it by definitions, which are special kinds of knowledge, i.e., assertions. As a case study, we show how assertional logic can be used to unify logic and probability, and more important AI building blocks including time.

from cs.AI updates on arXiv.org http://ift.tt/2iq8YqY

via IFTTT

Residual LSTM: Design of a Deep Recurrent Architecture for Distant Speech Recognition. (arXiv:1701.03360v1 [cs.LG])

In this paper, a novel architecture for a deep recurrent neural network, residual LSTM, is introduced. A plain LSTM has an internal memory cell that can learn long-term dependencies of sequential data. It also provides a temporal shortcut path to avoid vanishing or exploding gradients in the temporal domain. The proposed residual LSTM architecture provides an additional spatial shortcut path from lower layers for efficient training of deep networks with multiple LSTM layers. Compared with the previous work, highway LSTM, residual LSTM reuses the output projection matrix and the output gate of LSTM to control the spatial information flow instead of additional gate networks, which reduces the number of network parameters by more than 10%. An experiment for distant speech recognition on the AMI SDM corpus indicates that the performance of plain and highway LSTM networks degrades with increasing network depth. For example, 10-layer plain and highway LSTM networks showed 13.7% and 6.2% increases in WER over 3-layer baselines, respectively. In contrast, 10-layer residual LSTM networks provided the lowest WER, 41.0%, which corresponds to 3.3% and 2.8% WER reductions over 3-layer plain and highway LSTM networks, respectively. When trained on both the IHM and SDM corpora, the residual LSTM architecture provided a larger gain from increasing depth: a 10-layer residual LSTM showed a 3.0% WER reduction over the corresponding 5-layer one.

from cs.AI updates on arXiv.org http://ift.tt/2jBW6vq

via IFTTT

Improving Sampling from Generative Autoencoders with Markov Chains. (arXiv:1610.09296v3 [cs.LG] UPDATED)

We focus on generative autoencoders, such as variational or adversarial autoencoders, which jointly learn a generative model alongside an inference model. Generative autoencoders are those which are trained to softly enforce a prior on the latent distribution learned by the inference model. We call the distribution to which the inference model maps observed samples the learned latent distribution, which may not be consistent with the prior. We formulate a Markov chain Monte Carlo (MCMC) sampling process, equivalent to iteratively decoding and encoding, which allows us to sample from the learned latent distribution. Since the generative model learns to map from the learned latent distribution, rather than the prior, we may use MCMC to improve the quality of samples drawn from the generative model, especially when the learned latent distribution is far from the prior. Using MCMC sampling, we are able to reveal previously unseen differences between generative autoencoders trained either with or without a denoising criterion.

from cs.AI updates on arXiv.org http://ift.tt/2e2Cspa

via IFTTT

Tuning Recurrent Neural Networks with Reinforcement Learning. (arXiv:1611.02796v4 [cs.LG] UPDATED)

The approach of training sequence models using supervised learning and next-step prediction suffers from known failure modes. For example, it is notoriously difficult to ensure multi-step generated sequences have coherent global structure. We propose a novel sequence-learning approach in which we use a pre-trained Recurrent Neural Network (RNN) to supply part of the reward value in a Reinforcement Learning (RL) model. Thus, we can refine a sequence predictor by optimizing for some imposed reward functions, while maintaining good predictive properties learned from data. We propose efficient ways to solve this by augmenting deep Q-learning with a cross-entropy reward and deriving novel off-policy methods for RNNs from KL control. We explore the usefulness of our approach in the context of music generation. An LSTM is trained on a large corpus of songs to predict the next note in a musical sequence. This Note RNN is then refined using our method and rules of music theory. We show that by combining maximum likelihood (ML) and RL in this way, we can not only produce more pleasing melodies, but significantly reduce unwanted behaviors and failure modes of the RNN, while maintaining information learned from data.

from cs.AI updates on arXiv.org http://ift.tt/2gcycJD

via IFTTT

[FD] nextcloud/owncloud user enumeration vulnerability

Source: Gmail -> IFTTT-> Blogger

[FD] ICMPv6 PTBs and IPv6 frag filtering (particularly at BGP peers)

Source: Gmail -> IFTTT-> Blogger

[FD] Multiple vulnerabilities in cPanel <= 60.0.34

Source: Gmail -> IFTTT-> Blogger

How to anonymously view a private instagram account

from Google Alert - anonymous http://ift.tt/2ilWn4L

via IFTTT

Jeff Bezos is the anonymous buyer of the biggest house in Washington

from Google Alert - anonymous http://ift.tt/2jp5G5K

via IFTTT

Anonymous Woman Playwright Writes Solo Show About Her Sex Life, And Male Comedians ...

from Google Alert - anonymous http://ift.tt/2jBgk8r

via IFTTT

Anonymous Documentarists

from Google Alert - anonymous http://ift.tt/2ipx5G2

via IFTTT

Anonymous tip leads to drug bust in PA

from Google Alert - anonymous http://ift.tt/2ipKHRx

via IFTTT

Ravens give newly hired Greg Roman official title of senior assistant tight end coach, source tells Jamison Hensley (ESPN)

via IFTTT

Anonymous donor gives College of Saint Benedict $10 million

from Google Alert - anonymous http://ift.tt/2ikX26s

via IFTTT

propaganda minister

from Google Alert - anonymous http://ift.tt/2ip4jVZ

via IFTTT

All Addictions Anonymous Meetings

from Google Alert - anonymous http://ift.tt/2il18vv

via IFTTT

Phone-Hacking Firm Cellebrite Got Hacked; 900GB Of Data Stolen

from The Hacker News http://ift.tt/2iLCuoN

via IFTTT

Sneak Preview: Deep Learning for Computer Vision with Python

Wow, the Kickstarter launch date of January 18th is approaching so fast!

I still have a ton of work to do and I’m neck-deep in Kickstarter logistics, but I took a few minutes earlier today and recorded this sneak preview of Deep Learning for Computer Vision with Python just for you:

The video is fairly short at only 2m51s, and it’s absolutely worth the watch, but if you don’t have enough time to watch it, you can read the gist below:

- 0m09s: I show the output of training AlexNet from scratch on the massive ImageNet dataset — which I’ll be showing you exactly how to do inside my book.

- 0m37s: I discuss how this book has one goal: to help developers, researchers, and students just like yourself become experts in deep learning computer vision.

- 0m44s: Whether this is the first time you’ve worked with deep learning and neural networks or you’re already a seasoned deep learning practitioner, this book is engineered from the ground up to help you reach expert status.

- 0m58s: I provide a high level overview of the topics that will be covered inside my deep learning for computer vision book.

- 1m11s: I reveal the programming language (Python) and the libraries we’ll be using (Keras and mxnet) to build deep learning networks.

- 1m24s: Since we’ll be covering a massive amount of topics, I’ll be breaking the book down into volumes called “bundles”. You’ll be able to choose a bundle based on how in-depth you want to study deep learning, along with your particular budget.

- 1m38s: Each bundle will include the eBook files, video tutorials and walkthroughs, source code listings, access to the companion website, and a downloadable pre-configured Ubuntu VM.

- The Kickstarter campaign will be going live on Wednesday, January 18th at 10AM EST — I hope to see you on the Kickstarter backer list.

Like I said, if you have the time, the sneak preview is definitely worth the watch.

And I hope that you support the Deep Learning for Computer Vision with Python Kickstarter campaign on Wednesday, January 18th at 10AM EST — if you’re serious about becoming a deep learning expert, then this book will be the perfect fit for you!

To be notified when more Kickstarter announcements go live, be sure to sign up for the Kickstarter notification list!

The post Sneak Preview: Deep Learning for Computer Vision with Python appeared first on PyImageSearch.

from PyImageSearch http://ift.tt/2jAwdMC

via IFTTT

Anonymous Man Icon

from Google Alert - anonymous http://ift.tt/2iLbDt2

via IFTTT

ISS Daily Summary Report – 1/11/2017

from ISS On-Orbit Status Report http://ift.tt/2j4E46b

via IFTTT

Ravens: Former Bills OC Greg Roman joining coaching staff, with official duties still to be determined - Adam Schefter (ESPN)

via IFTTT

I have a new follower on Twitter

Lars

Devoted to Business Transformation & Knowledge Management in the Era of Cloud and Big Data

Deutschland

https://t.co/ivnXUNovJN

Following: 160 - Followers: 147

January 12, 2017 at 03:12AM via Twitter http://twitter.com/NoggleOnline

I have a new follower on Twitter

Lesley Thomas

#Actress turned #Indie #Filmmaker working on first #Horror #Zombies #Shortfilm. Please #SupportIndieFilm

Los Angeles, CA

Following: 12326 - Followers: 13112

January 12, 2017 at 01:17AM via Twitter http://twitter.com/LLesleyThomas

Ravens: Owner Steve Bisciotti reiterates team will continue to avoid players with history of domestic violence (ESPN)

via IFTTT

Wednesday, January 11, 2017

I have a new follower on Twitter

Uma Levrone

Retired Model #Digital #Marketing #Consultant #Crowdfunding #Indiegogo #Kickstarter #Startups

Boston, MA

Following: 1880 - Followers: 4992

January 11, 2017 at 10:30PM via Twitter http://twitter.com/UmaLevrone

I have a new follower on Twitter

Evan Carroll

Author, Keynote Speaker and Trainer. Founder @high5conf and @AttendedEvents. Past President @AMATriangle. Alum of @Capstrat, @ChannelAdvisor and @uncsils.

Raleigh, NC

https://t.co/2LF6EpfTeT

Following: 13324 - Followers: 15503

January 11, 2017 at 08:41PM via Twitter http://twitter.com/evancarroll

I have a new follower on Twitter

Servant Leadership

Servant Leadership Implementation Experts

Carlsbad, California

https://t.co/iW7s1iQV1S

Following: 1625 - Followers: 2625

January 11, 2017 at 08:41PM via Twitter http://twitter.com/SLILead

OpenNMT: Open-Source Toolkit for Neural Machine Translation. (arXiv:1701.02810v1 [cs.CL])

We describe an open-source toolkit for neural machine translation (NMT). The toolkit prioritizes efficiency, modularity, and extensibility with the goal of supporting NMT research into model architectures, feature representations, and source modalities, while maintaining competitive performance and reasonable training requirements. The toolkit consists of modeling and translation support, as well as detailed pedagogical documentation about the underlying techniques.

from cs.AI updates on arXiv.org http://ift.tt/2ihYwOR

via IFTTT

Decoding as Continuous Optimization in Neural Machine Translation. (arXiv:1701.02854v1 [cs.CL])

In this work, we propose a novel decoding approach for neural machine translation (NMT) based on continuous optimisation. The resulting optimisation problem can then be tackled using a whole range of continuous optimisation algorithms which have been developed and used in the literature mainly for training. Our approach is general and can be applied to other sequence-to-sequence neural models as well. We make use of this powerful decoding approach to intersect an underlying NMT with a language model, to intersect left-to-right and right-to-left NMT models, and to decode with soft constraints involving coverage and fertility of the source sentence words. The experimental results show the promise of the proposed framework.

from cs.AI updates on arXiv.org http://ift.tt/2j8JFKP

via IFTTT

Context-aware Captions from Context-agnostic Supervision. (arXiv:1701.02870v1 [cs.CV])

We introduce a technique to produce discriminative context-aware image captions (captions that describe differences between images or visual concepts) using only generic context-agnostic training data (captions that describe a concept or an image in isolation). For example, given images and captions of "siamese cat" and "tiger cat", our system generates language that describes the "siamese cat" in a way that distinguishes it from "tiger cat". We start with a generic language model that is context-agnostic and add a listener to discriminate between closely-related concepts. Our approach offers two key advantages over previous work: 1) our listener does not need separate training, and 2) it allows joint inference to decode sentences that satisfy both the speaker and listener -- yielding an introspective speaker. We first apply our introspective speaker to a justification task, i.e., to describe why an image contains a particular fine-grained category as opposed to another closely related category in the CUB-200-2011 dataset. We then study discriminative image captioning to generate language that uniquely refers to one out of two semantically similar images in the COCO dataset. Evaluations with discriminative ground truth for justification and human studies for discriminative image captioning reveal that our approach outperforms baseline generative and speaker-listener approaches for discrimination.

from cs.AI updates on arXiv.org http://ift.tt/2jlaNDF

via IFTTT

A Framework for Knowledge Management and Automated Reasoning Applied on Intelligent Transport Systems. (arXiv:1701.03000v1 [cs.AI])

Cyber-Physical Systems in general, and Intelligent Transport Systems (ITS) in particular, use heterogeneous data sources combined with problem-solving expertise in order to make critical decisions that may lead to some form of action, e.g., driver notifications, changes of traffic light signals, and braking to prevent an accident. Currently, a major part of the decision process is done by human domain experts, which is time-consuming, tedious and error-prone. Additionally, due to the intrinsic nature of knowledge possession, this decision process cannot be easily replicated or reused. Therefore, there is a need for automating the reasoning processes by providing computational systems with a formal representation of the domain knowledge and a set of methods to process that knowledge. In this paper, we propose a knowledge model that can be used to express both declarative knowledge about the systems' components, their relations and their current state, as well as procedural knowledge representing possible system behavior. In addition, we introduce a framework for knowledge management and automated reasoning (KMARF). The idea behind KMARF is to automatically select an appropriate problem solver based on formalized reasoning expertise in the knowledge base, and convert a problem definition to the corresponding format. This approach automates reasoning, thus reducing operational costs, and enables reusability of knowledge and methods across different domains. We illustrate the approach on a transportation planning use case.

from cs.AI updates on arXiv.org http://ift.tt/2j8Fgr6

via IFTTT

Towards Smart Proof Search for Isabelle. (arXiv:1701.03037v1 [cs.AI])

Despite the recent progress in automatic theorem provers, proof engineers are still suffering from the lack of powerful proof automation. In this position paper we first report our proof strategy language based on a meta-tool approach. Then, we propose an AI-based approach to drastically improve proof automation for Isabelle, while identifying three major challenges we plan to address for this objective.

from cs.AI updates on arXiv.org http://ift.tt/2jl6W9P

via IFTTT

Exploration: A Study of Count-Based Exploration for Deep Reinforcement Learning. (arXiv:1611.04717v2 [cs.AI] UPDATED)

Count-based exploration algorithms are known to perform near-optimally when used in conjunction with tabular reinforcement learning (RL) methods for solving small discrete Markov decision processes (MDPs). It is generally thought that count-based methods cannot be applied in high-dimensional state spaces, since most states will only occur once. Recent deep RL exploration strategies are able to deal with high-dimensional continuous state spaces through complex heuristics, often relying on optimism in the face of uncertainty or intrinsic motivation. In this work, we describe a surprising finding: a simple generalization of the classic count-based approach can reach near state-of-the-art performance on various high-dimensional and/or continuous deep RL benchmarks. States are mapped to hash codes, which allows their occurrences to be counted with a hash table. These counts are then used to compute a reward bonus according to the classic count-based exploration theory. We find that simple hash functions can achieve surprisingly good results on many challenging tasks. Furthermore, we show that a domain-dependent learned hash code may further improve these results. Detailed analysis reveals important aspects of a good hash function: 1) having appropriate granularity and 2) encoding information relevant to solving the MDP. This exploration strategy achieves near state-of-the-art performance on both continuous control tasks and Atari 2600 games, hence providing a simple yet powerful baseline for solving MDPs that require considerable exploration.
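
The hash-and-count idea in this abstract can be sketched in a few lines. The abstract does not specify the hash function or the bonus formula, so this example assumes a sign-of-random-projection hash and a bonus of the form `beta / sqrt(count)`; the class name `HashCounter` and all parameter values are hypothetical.

```python
import math
import random
from collections import defaultdict

class HashCounter:
    """Count visits to hashed states and turn the counts into a reward bonus."""

    def __init__(self, state_dim, n_bits=8, beta=0.1, seed=0):
        rng = random.Random(seed)
        # One random Gaussian projection per hash bit (an assumed, simple hash).
        self.projections = [[rng.gauss(0, 1) for _ in range(state_dim)]
                            for _ in range(n_bits)]
        self.beta = beta
        self.counts = defaultdict(int)

    def hash_state(self, state):
        # Each bit records which side of a random hyperplane the state lies on.
        return tuple(
            1 if sum(p * s for p, s in zip(row, state)) > 0 else 0
            for row in self.projections
        )

    def bonus(self, state):
        # Increment the visit count for this state's code, then compute the bonus.
        code = self.hash_state(state)
        self.counts[code] += 1
        return self.beta / math.sqrt(self.counts[code])

counter = HashCounter(state_dim=3)
first = counter.bonus([0.5, -1.0, 2.0])
second = counter.bonus([0.5, -1.0, 2.0])
```

Under these assumptions the bonus shrinks each time the same hash code is revisited, so an agent that adds it to the environment reward is nudged toward states whose codes it has seen less often. The number of bits controls granularity: fewer bits merge more states into one bucket.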

from cs.AI updates on arXiv.org http://ift.tt/2eYkVir

via IFTTT

Pose-Selective Max Pooling for Measuring Similarity. (arXiv:1609.07042v4 [cs.CV] CROSS LISTED)

In this paper, we deal with two challenges for measuring the similarity of the subject identities in practical video-based face recognition: the variation of the head pose in uncontrolled environments and the computational expense of processing videos. Since the frame-wise feature mean is unable to characterize the pose diversity among frames, we define and preserve the overall pose diversity and closeness in a video. Then, identity will be the only source of variation across videos since the pose varies even within a single video. Instead of simply using all the frames, we select those faces whose pose point is closest to the centroid of the K-means cluster containing that pose point. Then, we represent a video as a bag of frame-wise deep face features while the number of features has been reduced from hundreds to K. Since the video representation represents the identity well, we measure the subject similarity between two videos as the max correlation among all possible pairs in the two bags of features. On the official 5,000 video-pairs of the YouTube Face dataset for face verification, our algorithm achieves performance comparable to VGG-face, which averages over deep features of all frames. Other vision tasks can also benefit from the generic idea of employing geometric cues to improve the descriptiveness of deep features.
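
A simplified sketch of the two steps described in this abstract, frame selection by pose and max pooling over feature pairs, is shown below. It assumes the pose centroids have already been computed (for example by K-means), picks the frame nearest to each centroid, and uses cosine similarity as a stand-in for the "correlation" mentioned in the abstract. All function names and toy values are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def select_frames(poses, features, centroids):
    """For each pose centroid, keep the feature of the frame whose pose is nearest."""
    bag = []
    for c in centroids:
        nearest = min(range(len(poses)), key=lambda i: math.dist(poses[i], c))
        bag.append(features[nearest])
    return bag

def video_similarity(bag_a, bag_b):
    """Max pooling over the similarities of all cross-video feature pairs."""
    return max(cosine(u, v) for u in bag_a for v in bag_b)

# Toy video A: three frames with 2-D pose points and 2-D face features.
poses = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0)]
features = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]
centroids = [(0.0, 0.0), (1.0, 1.0)]  # assumed output of K-means with K = 2

bag_a = select_frames(poses, features, centroids)
bag_b = [[0.0, 2.0], [1.0, 1.0]]  # assumed bag for a second video
similarity = video_similarity(bag_a, bag_b)
```

In this toy case the three frames of video A are reduced to a bag of two features, and the best-matching pair across the two bags points in the same direction, so the similarity is 1.0.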

from cs.AI updates on arXiv.org http://ift.tt/2cVF35F

via IFTTT

I have a new follower on Twitter

Vizury

#GrowthMarketing platform that drives user retention and incremental conversions for #ecommerce, #BFSI and #travel brands.

Bangalore, India

http://t.co/Kud73Anu0Z

Following: 1102 - Followers: 2165

January 11, 2017 at 05:20PM via Twitter http://twitter.com/VizuryOneToOne

I have a new follower on Twitter

Ad Benchmark Index

ABX measures the advertising effectiveness of EVERY new ad across TV, radio, print, Internet, and out-of-home. Your ads. Your competitor's ads. All ads.

White Plains, New York

http://t.co/iOZLG12O1n

Following: 3165 - Followers: 3438

January 11, 2017 at 04:10PM via Twitter http://twitter.com/ABXindex

I have a new follower on Twitter

Jim Berkowitz

Founder of LaunchHawk | Startup Mentoring | Growth Consulting | Developer of the LaunchHawk "PinPoint" Program | Jazz DJ on KOTO-fm | Telluride CO

Telluride CO

https://t.co/IlG1GiTsCw

Following: 14674 - Followers: 18606

January 11, 2017 at 04:10PM via Twitter http://twitter.com/jberkowitz

My Deep Learning Kickstarter will go live on Wednesday, January 18th at 10AM EST

I’ve got some exciting news to share today!

My Deep Learning for Computer Vision with Python Kickstarter campaign is set to launch in exactly one week on Wednesday, January 18th at 10AM EST.

This book has only one goal: to help developers, researchers, and students just like yourself become experts in deep learning for image recognition and classification.

Whether this is the first time you’ve worked with machine learning and neural networks, or you’re already a seasoned deep learning practitioner, Deep Learning for Computer Vision with Python is engineered from the ground up to help you reach expert status.

Inside this book you’ll find:

- Super practical walkthroughs that present solutions to actual, real-world image classification problems, challenges, and competitions.

- Hands-on tutorials (with lots of code) that not only show you the algorithms behind deep learning for computer vision, but their implementations as well.

- A no-bullshit teaching style that is guaranteed to cut through all the cruft and help you master deep learning for image understanding and visual recognition.

As a heads up, over the next 7 days I’ll be posting a few more announcements that you won’t want to miss, including:

Thursday, January 12th:

A sneak preview of the Kickstarter campaign, including a demo video of what you’ll find inside the book.

Friday, January 13th:

The Table of Contents for Deep Learning for Computer Vision with Python. This book is extensive, covering the basics of deep learning all the way up to training large-scale networks on the massive ImageNet dataset. You won’t want to miss this list!

Monday, January 16th:

The full list of Kickstarter rewards (including early bird discounts) so you can plan ahead for which reward you want when the Kickstarter launches.

I won’t be posting this list publicly — this reward list is only for PyImageSearch readers who are part of the PyImageSearch Newsletter.

Tuesday, January 17th:

Please keep in mind that this book is already getting a lot of attention, so there will be multiple people in line for each reward level when the Kickstarter campaign launches on Wednesday the 18th. To help ensure you get the reward you want, I’ll be sharing tips and tricks you can use to ensure you’re first in line.

Again, I won’t be posting this publicly either. Make sure you sign up for the PyImageSearch Newsletter to receive these tips and tricks to ensure you’re at the front of the line.

Wednesday, January 18th:

The Kickstarter campaign link that you can use to claim your copy of Deep Learning for Computer Vision with Python.

To be notified when these announcements go live, be sure to sign up for the Kickstarter notification list!

The post My Deep Learning Kickstarter will go live on Wednesday, January 18th at 10AM EST appeared first on PyImageSearch.

from PyImageSearch http://ift.tt/2jE8pLK

via IFTTT

Private temp files are still accessible to anonymous users.

from Google Alert - anonymous http://ift.tt/2idnJ1P

via IFTTT

The 'branding'

from Google Alert - anonymous http://ift.tt/2jvQy5O

via IFTTT

I have a new follower on Twitter

Ben Murray

CFO | Download my free #SaaS #Excel models at https://t.co/DV3V9Ob9dc | Join my SaaS #Metrics group below | #hockey #coffee #golf

Dubuque, IA

https://t.co/AEWG0Wutol

Following: 4603 - Followers: 5030

January 11, 2017 at 08:55AM via Twitter http://twitter.com/BR_Murray

ISS Daily Summary Report – 1/10/2017

from ISS On-Orbit Status Report http://ift.tt/2j626j8

via IFTTT

I have a new follower on Twitter

Eric Kimberling

Founder of Panorama Consulting, world's leading independent #ERP consulting firm: selection, implementation, #digitaltransformation, org change, expert witness.

Denver, Colorado

https://t.co/BiPgdOQZC7

Following: 2572 - Followers: 5493

January 11, 2017 at 08:25AM via Twitter http://twitter.com/erickimberling

Browser AutoFill Feature Can Leak Your Personal Information to Hackers

from The Hacker News http://ift.tt/2ij5KVY

via IFTTT

Secure Your Enterprise With Zoho Vault Password Management Software

from The Hacker News http://ift.tt/2iEYMZ7

via IFTTT

[FD] Cobi Tools v1.0.8 iOS - Persistent Web Vulnerability

Solution - Fix & Patch:
=======================
The solution is to parse the devicename of the ios device within the email message body context. Disallow the usage of special chars for devicenames in the app to prevent local exploitation.

Security Risk:
==============
The security risk of the persistent input validation vulnerability in the cobi tools application is estimated as medium. (CVSS 3.5)

Credits & Authors:
==================
Vulnerability Laboratory [Research Team] - Benjamin Kunz Mejri (research@vulnerability-lab.com) [http://ift.tt/1TDrAB7.]

Disclaimer & Information:
=========================
The information provided in this advisory is provided as it is without any warranty. Vulnerability Lab disclaims all warranties, either expressed or implied, including the warranties of merchantability and capability for a particular purpose. Vulnerability-Lab or its suppliers are not liable in any case of damage, including direct, indirect, incidental, consequential loss of business profits or special damages, even if Vulnerability-Lab or its suppliers have been advised of the possibility of such damages. Some states do not allow the exclusion or limitation of liability mainly for consequential or incidental damages so the foregoing limitation may not apply. We do not approve or encourage anybody to break any licenses, policies, deface websites, hack into databases or trade with stolen data.

Domains: http://ift.tt/1jnqRwA - www.vuln-lab.com - http://ift.tt/1kouTut
Section: magazine.vulnerability-lab.com - http://ift.tt/1zNuo47 - http://ift.tt/1wo6y8x
Social: twitter.com/vuln_lab - http://ift.tt/1kouSqa - http://youtube.com/user/vulnerability0lab
Feeds: http://ift.tt/1iS1DH0 - http://ift.tt/1kouSqh - http://ift.tt/1kouTKS
Programs: http://ift.tt/1iS1GCs - http://ift.tt/1iS1FyF - http://ift.tt/1oSBx0A

Any modified copy or reproduction, including partially usages, of this file, resources or information requires authorization from Vulnerability Laboratory. Permission to electronically redistribute this alert in its unmodified form is granted. All other rights, including the use of other media, are reserved by Vulnerability-Lab Research Team or its suppliers. All pictures, texts, advisories, source code, videos and other information on this website is trademark of vulnerability-lab team & the specific authors or managers. To record, list, modify, use or edit our material contact (admin@) to get a ask permission. Copyright © 2017 | Vulnerability Laboratory - [Evolution Security GmbH]™
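
The two mitigations the advisory recommends (encoding the device name before it is placed in the email body, and restricting special characters in device names) can be illustrated with a short Python sketch. This is not the vendor's patch and not iOS code: the function names, the allowlist pattern, and the HTML template are hypothetical and only show the general technique.

```python
import html
import re

# Hypothetical allowlist: letters, digits, spaces and a few harmless marks.
_SAFE_NAME = re.compile(r"[A-Za-z0-9 _.'-]{1,64}")


def is_valid_device_name(name):
    """Reject names containing characters outside the allowlist."""
    return _SAFE_NAME.fullmatch(name) is not None


def render_email_body(device_name):
    """Escape the device name before embedding it in an HTML email body."""
    safe = html.escape(device_name, quote=True)
    return "<p>Sent from device: {}</p>".format(safe)
```

Applying both checks is deliberate: validation blocks malicious names at input time, while escaping at output time still protects the message body if an unvalidated name reaches it.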

Source: Gmail -> IFTTT-> Blogger

[FD] Boxoft Wav v1.1.0.0 - Buffer Overflow Vulnerability

Source: Gmail -> IFTTT-> Blogger

[FD] Huawei Flybox B660 - (POST Reboot) CSRF Vulnerability

Source: Gmail -> IFTTT-> Blogger

[FD] Bit Defender #39 - Auth Token Bypass Vulnerability

Source: Gmail -> IFTTT-> Blogger