
Saturday, November 11, 2017

anonymous

from Google Alert - anonymous http://ift.tt/2ySVcHc

via IFTTT

Anonymous legislation? Democratic leader says it's not a good idea

from Google Alert - anonymous http://ift.tt/2hwsDaR

via IFTTT

Mothers In Law Anonymous: A Place To Air Your Frustrations

from Google Alert - anonymous http://ift.tt/2AwMqec

via IFTTT

Anonymous in italian

from Google Alert - anonymous http://ift.tt/2AvktU8

via IFTTT

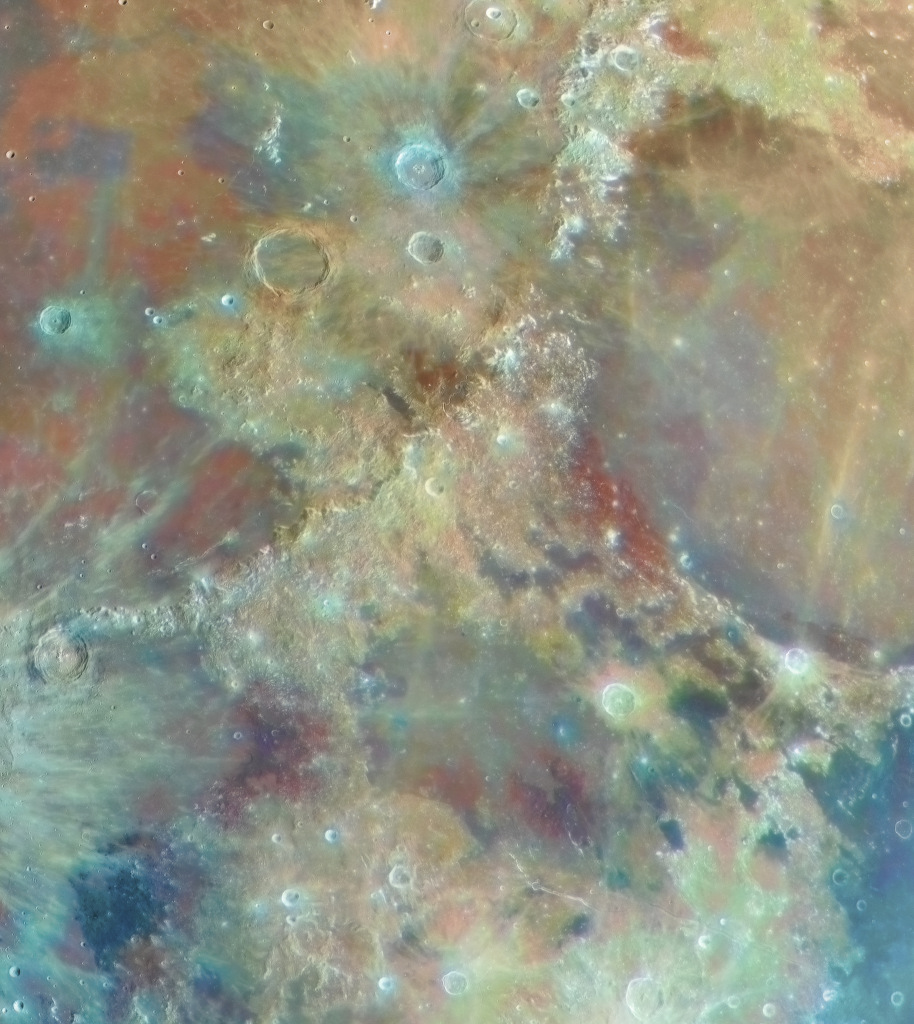

A Colourful Moon

Friday, November 10, 2017

Narcotics Anonymous

from Google Alert - anonymous http://ift.tt/2zt7kOp

via IFTTT

The Culture of Alcoholics Anonymous Perpetuates Sexual Abuse

from Google Alert - anonymous http://ift.tt/2jh1IQU

via IFTTT

AL East Offseason Preview: What big question is facing each team? (ESPN)

via IFTTT

Lit Manager & Producer at Anonymous Content Desires Assistant

from Google Alert - anonymous http://ift.tt/2yqniFi

via IFTTT

Appeals court rules Glassdoor must reveal identities of anonymous users

from Google Alert - anonymous http://ift.tt/2i0FcZd

via IFTTT

Landon Collins Backs Up Ben McAdoo In Wake Of Anonymous Ripping

from Google Alert - anonymous http://ift.tt/2AteVJx

via IFTTT

Judge rules company must hand over names of anonymous contributors

from Google Alert - anonymous http://ift.tt/2yOSYZj

via IFTTT

“Romantics Anonymous” Review of Shakespeare's Globe Theatre

from Google Alert - anonymous http://ift.tt/2iMf1ES

via IFTTT

Free anonymous proxy server list usa

from Google Alert - anonymous http://ift.tt/2zxDUMC

via IFTTT

Anonymous Donation Honors Veterans at Memorial Park

from Google Alert - anonymous http://ift.tt/2ynFcZe

via IFTTT

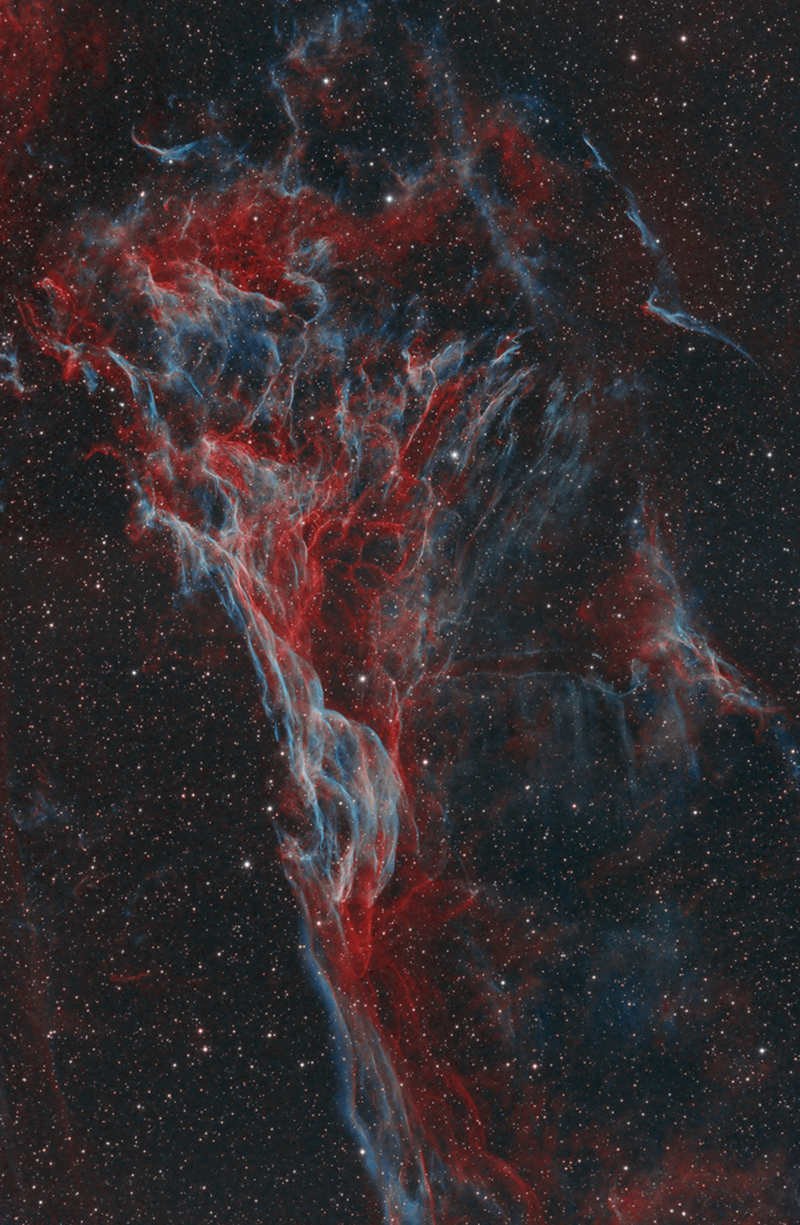

Williamina Fleming's Triangular Wisp

Thursday, November 9, 2017

Players upset with anonymous report ripping McAdoo

from Google Alert - anonymous http://ift.tt/2zvDQgo

via IFTTT

Alcoholic Anonymous-Fairfax Public Library

from Google Alert - anonymous http://ift.tt/2yMo6IW

via IFTTT

I have a new follower on Twitter

Rebecca Eddington


Following: 124 - Followers: 6

November 09, 2017 at 05:16PM via Twitter http://twitter.com/seppmeyer

Business owner receives anonymous apology letter for suspected truck damage

from Google Alert - anonymous http://ift.tt/2hfRXhD

via IFTTT

ISS Daily Summary Report – 11/08/2017

from ISS On-Orbit Status Report http://ift.tt/2ApnQMl

via IFTTT

Vault 8: WikiLeaks Releases Source Code For Hive - CIA's Malware Control System

from The Hacker News http://ift.tt/2jcyxyp

via IFTTT

Anonymous: Causa

from Google Alert - anonymous http://ift.tt/2Antv5D

via IFTTT

Anonymous in-line question: "Are you satisfied with this answer?"

from Google Alert - anonymous http://ift.tt/2hWlfmi

via IFTTT

Russian 'Fancy Bear' Hackers Using (Unpatched) Microsoft Office DDE Exploit

from The Hacker News http://ift.tt/2hUUv5y

via IFTTT

Hacker Distributes Backdoored IoT Vulnerability Scanning Script to Hack Script Kiddies

from The Hacker News http://ift.tt/2AvpXPt

via IFTTT

NGC 1055 Close up

Wednesday, November 8, 2017

I have a new follower on Twitter

Epiphan Video

Epiphan Video produces award-winning audio visual solutions to capture, scale, mix, encode, #livestream, record & play high-resolution video. #ProAV #livevideo

Ottawa, ON

http://t.co/VIxbCYGm4S

Following: 3652 - Followers: 9706

November 08, 2017 at 09:56PM via Twitter http://twitter.com/EpiphanVideo

Anonymous donor pledges $10M to Focused Ultrasound Foundation

from Google Alert - anonymous http://ift.tt/2AtTqJF

via IFTTT

Anonymous Speech Online Dealt a Blow in US v. Glassdoor Opinion

from Google Alert - anonymous http://ift.tt/2ykv181

via IFTTT

[FD] AST-2017-009: Buffer overflow in pjproject header parsing can cause crash in Asterisk

Source: Gmail -> IFTTT-> Blogger

ISS Daily Summary Report – 11/07/2017

from ISS On-Orbit Status Report http://ift.tt/2m1Vs0i

via IFTTT

8th Street, Ocean City, MD's surf is at least 5.02ft high

8th Street, Ocean City, MD Summary

At 2:00 AM, surf min of 5.02ft. At 8:00 AM, surf min of 3.84ft. At 2:00 PM, surf min of 3.75ft. At 8:00 PM, surf min of 3.33ft.

Surf maximum: 5.22ft (1.59m)

Surf minimum: 5.02ft (1.53m)

Tide height: 1.76ft (0.54m)

Wind direction: NE

Wind speed: 10.3 KTS

from Surfline http://ift.tt/1kVmigH

via IFTTT

Anonymous, Therefore Free?

from Google Alert - anonymous http://ift.tt/2m5OC9Y

via IFTTT

Anonymous User

from Google Alert - anonymous http://ift.tt/2yGWapB

via IFTTT

Anonymous tip sends police on hunt for body

from Google Alert - anonymous http://ift.tt/2m3zMAT

via IFTTT

Oh, Crap! Someone Accidentally Triggered A Flaw That Locked Up $280 Million In Ethereum

from The Hacker News http://ift.tt/2yglxug

via IFTTT

Tuesday, November 7, 2017

Anonymous spoiler threatens to release list of Skulls winners ahead of Friday night presentation

from Google Alert - anonymous http://ift.tt/2yFn5lR

via IFTTT

Anonymous tip sends police on hunt for body

from Google Alert - anonymous http://ift.tt/2zov04b

via IFTTT

Captain Anonymous

from Google Alert - anonymous http://ift.tt/2hdNKex

via IFTTT

Anonymous - Chef

from Google Alert - anonymous http://ift.tt/2yd34yP

via IFTTT

Jquery doesn't work for anonymous users

from Google Alert - anonymous http://ift.tt/2yfXBaz

via IFTTT

Anonymous meeting join fails if the meeting organizer is disabled for federation in Skype for ...

from Google Alert - anonymous http://ift.tt/2zpRQdu

via IFTTT

📈 Ravens rise three spots to No. 20 in Week 10 Power Rankings (ESPN)

via IFTTT

Newly Uncovered 'SowBug' Cyber-Espionage Group Stealing Diplomatic Secrets Since 2015

from The Hacker News http://ift.tt/2ydJtOK

via IFTTT

ISS Daily Summary Report – 11/06/2017

from ISS On-Orbit Status Report http://ift.tt/2zo1AD2

via IFTTT

Built-in Keylogger Found in MantisTek GK2 Keyboards—Sends Data to China

from The Hacker News http://ift.tt/2zjXfTR

via IFTTT

I have a new follower on Twitter

IIPLA

International Intellectual Property Law Association

San Jose

http://t.co/5mAMrBdyUq

Following: 2375 - Followers: 9117

November 07, 2017 at 08:44AM via Twitter http://twitter.com/IIPLA_org

Plant Splooge

from Google Alert - anonymous http://ift.tt/2j6qrra

via IFTTT

IEEE P1735 Encryption Is Broken—Flaws Allow Intellectual Property Theft

from The Hacker News http://ift.tt/2j82RKy

via IFTTT

The Prague Astronomical Clock

Monday, November 6, 2017

Paradise Papers expose 'flight' risks posed by anonymous companies and private US aircraft

from Google Alert - anonymous http://ift.tt/2AoEhcC

via IFTTT

8th Street, Ocean City, MD's surf is at least 5.13ft high

8th Street, Ocean City, MD Summary

At 2:00 AM, surf min of 5.13ft. At 8:00 AM, surf min of 4.78ft. At 2:00 PM, surf min of 4.36ft. At 8:00 PM, surf min of 4.02ft.

Surf maximum: 5.94ft (1.81m)

Surf minimum: 5.13ft (1.56m)

Tide height: 0.88ft (0.27m)

Wind direction: NE

Wind speed: 16.66 KTS

from Surfline http://ift.tt/1kVmigH

via IFTTT

rather anonymous demand; Cease and desist selling

from Google Alert - anonymous http://ift.tt/2znMT38

via IFTTT

mlp/ - Anonymous in Equestria Thread #1133

from Google Alert - anonymous http://ift.tt/2ybMHCk

via IFTTT

Anonymous user 46eb37

from Google Alert - anonymous http://ift.tt/2hhMaMf

via IFTTT

Petroleum Minister to write to President about anonymous SMS

from Google Alert - anonymous http://ift.tt/2zilt0O

via IFTTT

Idaho attorney general won't act on anonymous bond complaint

from Google Alert - anonymous http://ift.tt/2j5XJXa

via IFTTT

Police warn parents about anonymous commenting app Sarahah

from Google Alert - anonymous http://ift.tt/2AeYOz9

via IFTTT

Dear Anonymous Future Soulmate

from Google Alert - anonymous http://ift.tt/2znqLrw

via IFTTT

ISS Daily Summary Report – 11/03/2017

from ISS On-Orbit Status Report http://ift.tt/2zlN54U

via IFTTT

Deep learning: How OpenCV’s blobFromImage works

Today’s blog post is inspired by a number of PyImageSearch readers who have commented on previous deep learning tutorials wanting to understand what exactly OpenCV’s blobFromImage function is doing under the hood.

You see, to obtain (correct) predictions from deep neural networks you first need to preprocess your data.

In the context of deep learning and image classification, these preprocessing tasks normally involve:

- Mean subtraction

- Scaling by some factor

OpenCV’s new deep neural network (dnn) module contains two functions that can be used for preprocessing images and preparing them for classification via pre-trained deep learning models.

In today’s blog post we are going to take apart OpenCV’s cv2.dnn.blobFromImage and cv2.dnn.blobFromImages preprocessing functions and understand how they work.

To learn more about image preprocessing for deep learning via OpenCV, just keep reading.

Looking for the source code to this post?

Jump right to the downloads section.

Deep learning: How OpenCV’s blobFromImage works

OpenCV provides two functions to facilitate image preprocessing for deep learning classification:

- cv2.dnn.blobFromImage

- cv2.dnn.blobFromImages

These two functions perform:

- Mean subtraction

- Scaling

- And optionally channel swapping

In the remainder of this tutorial we’ll:

- Explore mean subtraction and scaling

- Examine the function signature of each deep learning preprocessing function

- Study these methods in detail

- And finally, apply OpenCV’s deep learning functions to a set of input images

Let’s go ahead and get started.

Deep learning and mean subtraction

Figure 1: A visual representation of mean subtraction where the RGB mean (center) has been calculated from a dataset of images and subtracted from the original image (left) resulting in the output image (right).

Before we dive into an explanation of OpenCV’s deep learning preprocessing functions, we first need to understand mean subtraction. Mean subtraction is used to help combat illumination changes in the input images in our dataset. We can therefore view mean subtraction as a technique used to aid our Convolutional Neural Networks.

Before we even begin training our deep neural network, we first compute the average pixel intensity across all images in the training set for each of the Red, Green, and Blue channels.

This implies that we end up with three variables: $\mu_R$, $\mu_G$, and $\mu_B$.

Typically the resulting values are a 3-tuple consisting of the mean of the Red, Green, and Blue channels, respectively.

For example, the mean values for the ImageNet training set are R=123.68, G=116.78, and B=103.94 (you may have already encountered these values before if you have used a network that was pre-trained on ImageNet).

However, in some cases the mean may be computed at every pixel location (a per-pixel "mean image") rather than as a single value per channel, resulting in an M×N matrix for each of the Red, Green, and Blue channels. In this case the M×N matrix for each channel is then subtracted from the input image during training/testing.

Both methods are perfectly valid forms of mean subtraction; however, we tend to see the single-value-per-channel version (the 3-tuple) used more often, especially for larger datasets.
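To make the two flavors of mean concrete, here is a toy NumPy sketch. The "dataset" is randomly generated (an assumption for illustration, not real training data); the point is only the shapes: the 3-tuple holds one value per channel, while the mean image keeps an M×N matrix per channel.

```python
import numpy as np

# Hypothetical "training set": 10 random 8-bit RGB images, 4x5 pixels each,
# stored as (num_images, height, width, channels).
rng = np.random.default_rng(seed=42)
dataset = rng.integers(0, 256, size=(10, 4, 5, 3)).astype(np.float32)

# Option 1: one mean per channel, averaged over every image and pixel
# location. The result is a 3-tuple (mu_R, mu_G, mu_B).
channel_means = dataset.mean(axis=(0, 1, 2))

# Option 2: a per-pixel "mean image", averaged over images only. The result
# is an M x N matrix for each channel (here 4 x 5 x 3).
mean_image = dataset.mean(axis=0)

image = dataset[0]
# Broadcasting subtracts the same 3 values from every pixel...
centered_per_channel = image - channel_means
# ...while the mean image is subtracted element by element.
centered_per_pixel = image - mean_image

print(channel_means.shape)  # (3,)
print(mean_image.shape)     # (4, 5, 3)
```

Averaging the mean image over its pixel locations recovers the 3-tuple, so the per-channel version can be seen as a coarser summary of the same statistics.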

When we are ready to pass an image through our network (whether for training or testing), we subtract the mean, $\mu$, from each input channel of the input image:

$$R = R - \mu_R, \quad G = G - \mu_G, \quad B = B - \mu_B$$

We may also have a scaling factor, $\sigma$, which adds in a normalization:

$$R = \frac{R - \mu_R}{\sigma}, \quad G = \frac{G - \mu_G}{\sigma}, \quad B = \frac{B - \mu_B}{\sigma}$$

The value of $\sigma$ may be the standard deviation across the training set (thereby turning the preprocessing step into a standard score/z-score). However, $\sigma$ may also be manually set (versus calculated) to scale the input image space into a particular range; it really depends on the architecture, how the network was trained, and the techniques the implementing author is familiar with.
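The mean subtraction and scaling steps described above can be sketched in NumPy as follows. The function name normalize and the example pixel and mean values are hypothetical, chosen so the arithmetic is easy to check by hand; passing sigma=1.0 reduces it to plain mean subtraction.

```python
import numpy as np

def normalize(image, mean, sigma=1.0):
    """Subtract a per-channel mean, then divide by sigma.

    image: H x W x 3 array, channels in the same order as `mean`.
    mean:  3-tuple of per-channel means.
    sigma: scalar; sigma=1.0 means mean subtraction only.
    """
    image = np.asarray(image, dtype=np.float32)
    return (image - np.asarray(mean, dtype=np.float32)) / sigma

# A hypothetical 1x1 image whose single pixel is (R, G, B) = (130, 120, 110)
pixel = np.array([[[130.0, 120.0, 110.0]]])
# Hypothetical dataset means (mu_R, mu_G, mu_B), chosen for easy arithmetic
mu = (120.0, 115.0, 100.0)

print(normalize(pixel, mu))             # mean subtraction only
print(normalize(pixel, mu, sigma=5.0))  # mean subtraction plus scaling
```

Note that OpenCV multiplies by its scale factor rather than dividing, so dividing by $\sigma$ here corresponds to a multiplier of $1/\sigma$.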

It’s important to note that not all deep learning architectures perform mean subtraction and scaling! Before you preprocess your images, be sure to read the relevant publication/documentation for the deep neural network you are using.

As you’ll find on your deep learning journey, some architectures perform mean subtraction only (thereby setting $\sigma = 1$). Other architectures perform both mean subtraction and scaling. Still other architectures choose to perform no mean subtraction or scaling. Always check the relevant publication you are implementing/using to verify the techniques the author is using.

Mean subtraction, scaling, and normalization are covered in more detail inside Deep Learning for Computer Vision with Python.

OpenCV’s blobFromImage and blobFromImages functions

Let’s start off by referring to the official OpenCV documentation for cv2.dnn.blobFromImage:

[blobFromImage] creates 4-dimensional blob from image. Optionally resizes and crops image from center, subtract mean values, scales values by scalefactor, swap Blue and Red channels.

Informally, a blob is just a (potentially collection of) image(s) with the same spatial dimensions (i.e., width and height) and the same depth (number of channels), all of which have been preprocessed in the same manner.

The cv2.dnn.blobFromImage and cv2.dnn.blobFromImages functions are nearly identical.

Let’s start by examining the cv2.dnn.blobFromImage function signature below:

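```
blob = cv2.dnn.blobFromImage(image[, scalefactor[, size[, mean[, swapRB]]]])
```

Square brackets mark optional arguments, following the notation of OpenCV’s own Python docstrings; later OpenCV releases append further optional arguments such as crop.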

I’ve provided a discussion of each parameter below:

- image: This is the input image we want to preprocess before passing it through our deep neural network for classification.

- scalefactor: After we perform mean subtraction we can optionally scale our images by some factor. This value defaults to 1.0 (i.e., no scaling) but we can supply another value as well. It’s also important to note that scalefactor should be $1 / \sigma$, as we’re actually multiplying the input channels (after mean subtraction) by scalefactor.

- size: Here we supply the spatial size that the Convolutional Neural Network expects. For most current state-of-the-art neural networks this is either 224×224, 227×227, or 299×299.

- mean: These are our mean subtraction values. They can be a 3-tuple of the RGB means or they can be a single value in which case the supplied value is subtracted from every channel of the image. If you’re performing mean subtraction, ensure you supply the 3-tuple in (R, G, B) order, especially when utilizing the default behavior of swapRB=True.

- swapRB: OpenCV assumes images are in BGR channel order; however, the mean value assumes we are using RGB order. To resolve this discrepancy we can swap the R and B channels in image by setting this value to True. In OpenCV 3.3 this channel swapping is performed by default; OpenCV 4.x changed the default to swapRB=False, so it is safest to pass the value explicitly.
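A small NumPy illustration (not OpenCV’s implementation) of what swapRB means: swapping R and B just reverses the channel axis, and subtracting an (R, G, B)-ordered mean after the swap gives the same result as subtracting the reversed mean before it. The pixel and mean values below are hypothetical.

```python
import numpy as np

# A hypothetical 1x2 image in OpenCV's default BGR order, shape (H, W, C)
bgr = np.array([[[10.0, 20.0, 30.0],
                 [40.0, 50.0, 60.0]]], dtype=np.float32)

# Swapping R and B is just reversing the last (channel) axis
rgb = bgr[..., ::-1]

# Hypothetical per-channel means supplied in (R, G, B) order
mean_rgb = np.array([1.0, 2.0, 3.0], dtype=np.float32)

# Swap first, then subtract the RGB-ordered mean...
a = rgb - mean_rgb
# ...which matches subtracting the (B, G, R)-ordered mean, then swapping
b = (bgr - mean_rgb[::-1])[..., ::-1]

print(rgb[0, 0])         # first pixel, now in (R, G, B) order
print(np.allclose(a, b))
```

The takeaway is that the mean tuple must be written in whatever channel order the image has after any swap.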

The cv2.dnn.blobFromImage function returns a blob, which is our input image after mean subtraction, normalizing, and channel swapping.
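To summarize the pipeline, here is a rough NumPy approximation of what blobFromImage computes for an image that is already at the target size. It is a sketch under stated assumptions, not OpenCV’s source: blob_from_image_sketch is a hypothetical helper, it skips the resizing (and, in newer releases, optional cropping) that OpenCV performs, and it applies the mean in post-swap channel order as described for the mean parameter.

```python
import numpy as np

def blob_from_image_sketch(image, scalefactor=1.0, mean=(0.0, 0.0, 0.0),
                           swap_rb=False):
    """Hypothetical helper approximating blobFromImage's arithmetic.

    Assumes `image` is already at the network's input size (no resize or
    crop is performed) and is H x W x 3 in BGR order, as cv2.imread returns.
    `mean` is interpreted in the channel order *after* any swap.
    Returns a float32 array of shape (1, 3, H, W), i.e. NCHW.
    """
    img = np.asarray(image, dtype=np.float32)
    if swap_rb:
        img = img[..., ::-1]  # reverse channel axis: BGR -> RGB
    img = (img - np.asarray(mean, dtype=np.float32)) * scalefactor
    chw = img.transpose(2, 0, 1)  # HWC -> CHW
    return np.ascontiguousarray(chw[np.newaxis, ...])  # add batch axis

# Hypothetical 224x224 BGR image filled with the color (B, G, R) = (110, 120, 130)
image = np.full((224, 224, 3), (110, 120, 130), dtype=np.uint8)
blob = blob_from_image_sketch(image, 1.0, (104, 117, 123))
print(blob.shape)        # (1, 3, 224, 224)
print(blob[0, :, 0, 0])  # channel values at pixel (0, 0) after mean subtraction
```

Subtracting first and multiplying second mirrors the parameter descriptions above, where scalefactor is applied to the input channels after mean subtraction.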

The cv2.dnn.blobFromImages function is exactly the same:

```
blob = cv2.dnn.blobFromImages(images[, scalefactor[, size[, mean[, swapRB]]]])
```

The only exception is that we can pass in multiple images, enabling us to batch process a set of images.

If you’re processing multiple images/frames, be sure to use the cv2.dnn.blobFromImages function as there is less function call overhead and you’ll be able to batch process the images/frames faster.
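The batching idea can be illustrated with plain NumPy: equally sized H×W×C images are stacked and transposed into a single (N, C, H, W) array, the same layout a blobFromImages result has. stack_to_nchw is a hypothetical helper that covers the layout only (no mean subtraction, scaling, or swapping), and np.stack refusing mismatched shapes mirrors the requirement that every image in a blob share the same spatial dimensions.

```python
import numpy as np

def stack_to_nchw(images):
    """Hypothetical helper: stack equally sized H x W x C images into an
    (N, C, H, W) float32 array. Layout only: no mean subtraction,
    scaling, or channel swapping is applied."""
    batch = np.stack([np.asarray(im, dtype=np.float32) for im in images])  # (N, H, W, C)
    return np.ascontiguousarray(batch.transpose(0, 3, 1, 2))              # (N, C, H, W)

# Four hypothetical 224x224, 3-channel images
images = [np.zeros((224, 224, 3), dtype=np.uint8) for _ in range(4)]
print(stack_to_nchw(images).shape)  # (4, 3, 224, 224)

# Images with different spatial sizes cannot share one blob
try:
    stack_to_nchw([np.zeros((224, 224, 3)), np.zeros((227, 227, 3))])
except ValueError as err:
    print("cannot batch:", err)
```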

Deep learning with OpenCV’s blobFromImage function

Now that we’ve studied both the blobFromImage and blobFromImages functions, let’s apply them to a few example images and then pass them through a Convolutional Neural Network for classification.

As a prerequisite, you need OpenCV version 3.3.0 at a minimum. NumPy is a dependency of OpenCV’s Python bindings and imutils is my package of convenience functions available on GitHub and in the Python Package Index.

If you haven’t installed OpenCV, you’ll want to follow the latest tutorials available here, and be sure to specify OpenCV 3.3.0 or higher when you clone/download opencv and opencv_contrib.

The imutils package can be installed via pip:

$ pip install imutils

Assuming your image processing environment is ready to go, let’s open up a new file, name it blob_from_images.py, and insert the following code:

```python
# import the necessary packages
from imutils import paths
import numpy as np
import cv2

# load the class labels from disk
rows = open("synset_words.txt").read().strip().split("\n")
classes = [r[r.find(" ") + 1:].split(",")[0] for r in rows]

# load our serialized model from disk
net = cv2.dnn.readNetFromCaffe("bvlc_googlenet.prototxt",
    "bvlc_googlenet.caffemodel")

# grab the paths to the input images
imagePaths = sorted(list(paths.list_images("images/")))
```

First we import imutils, numpy, and cv2 (Lines 2-4).

Then we read synset_words.txt (the ImageNet class labels) and extract classes, our class labels, on Lines 7 and 8.
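To see what the list comprehension on Line 8 extracts, here it is applied to two sample lines in the "wnid name, synonyms" format of the ImageNet synset_words.txt file. The two rows are typed in by hand for illustration rather than read from disk.

```python
# Two sample lines in the "<wnid> <comma-separated names>" format
rows = [
    "n01440764 tench, Tinca tinca",
    "n01443537 goldfish, Carassius auratus",
]

# Drop the WordNet ID (everything up to the first space), then keep
# only the first comma-separated name
classes = [r[r.find(" ") + 1:].split(",")[0] for r in rows]
print(classes)  # ['tench', 'goldfish']
```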

To load our model from disk we use the DNN function, cv2.dnn.readNetFromCaffe, and specify bvlc_googlenet.prototxt as the network definition file and bvlc_googlenet.caffemodel as the file holding the trained weights (Lines 11 and 12).

Note: You can grab the pre-trained Convolutional Neural Network, class labels text file, source code, and example images to this post using the “Downloads” section at the bottom of this tutorial.

Finally, we grab the paths to the input images on Line 15. If you’re using Windows you should change the path separator here to ensure you can correctly load the image paths.

Next, we’ll load images from disk and pre-process them using blobFromImage:

```python
# (1) load the first image from disk, (2) resize it to 224x224 pixels,
# and (3) construct a blob, kept in BGR order (swapRB=False) to match the
# BGR mean values (104, 117, 123) that the Caffe GoogLeNet model expects
image = cv2.imread(imagePaths[0])
resized = cv2.resize(image, (224, 224))
blob = cv2.dnn.blobFromImage(resized, 1, (224, 224), (104, 117, 123), swapRB=False)
print("First Blob: {}".format(blob.shape))
```

In this block, we first load the image (Line 20) and then resize it to 224×224 (Line 21), the required input image dimensions for GoogLeNet.

Now we’ve reached the crux of this post.

On Line 22, we call cv2.dnn.blobFromImage which, as stated in the previous section, will create a 4-dimensional blob for use in our neural net.

Let’s print the shape of our blob so we can analyze it in the terminal later (Line 23).

Next, we’ll feed blob through GoogLeNet:

```python
# set the input to the pre-trained deep learning network and obtain
# the output predicted probabilities for each of the 1,000 ImageNet
# classes
net.setInput(blob)
preds = net.forward()

# sort the probabilities (in descending order), grab the index of the
# top predicted label, and draw it on the input image
idx = np.argsort(preds[0])[::-1][0]
text = "Label: {}, {:.2f}%".format(classes[idx],
    preds[0][idx] * 100)
cv2.putText(image, text, (5, 25), cv2.FONT_HERSHEY_SIMPLEX,
    0.7, (0, 0, 255), 2)

# show the output image
cv2.imshow("Image", image)
cv2.waitKey(0)
```

If you’re familiar with recent deep learning posts on this blog, the above lines should look familiar.

We feed the blob through the network (Lines 28 and 29) and grab the predictions, preds.

Then we sort preds (Line 33) with the most confident predictions at the front of the list, and generate a label text to display on the image. The label text consists of the class label and the prediction percentage value for the top prediction (Lines 34 and 35).

From there, we write the label text at the top of the image (Lines 36 and 37) followed by displaying the image on the screen and waiting for a keypress before moving on (Lines 40 and 41).
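As an aside, np.argsort(preds[0])[::-1][0] is simply the index of the largest probability. The hypothetical preds array below (four classes instead of 1,000) shows that np.argmax gives the same top-1 index for distinct probabilities without a full sort, while the reversed argsort remains useful when you want a top-k list.

```python
import numpy as np

# Hypothetical network output for one image: shape (1, num_classes)
preds = np.array([[0.05, 0.70, 0.10, 0.15]])

# The approach used above: sort ascending, reverse, take the first index
idx_sorted = np.argsort(preds[0])[::-1][0]
# np.argmax returns the same top-1 index without sorting everything
idx_max = int(np.argmax(preds[0]))

# The reversed argsort is still handy for a top-k list (here k=3)
top3 = np.argsort(preds[0])[::-1][:3]
print(idx_sorted, idx_max, top3)
```

If two classes tie exactly, the two approaches may pick different indices, since np.argmax returns the first occurrence.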

Now it’s time to use the plural form of the blobFromImage function.

Here we’ll do (nearly) the same thing, except we’ll instead create and populate a list of images followed by passing the list as a parameter to blobFromImages:

```python
# initialize the list of images we'll be passing through the network
images = []

# loop over the input images (excluding the first one since we
# already classified it), pre-process each image, and update the
# `images` list
for p in imagePaths[1:]:
    image = cv2.imread(p)
    image = cv2.resize(image, (224, 224))
    images.append(image)

# convert the images list into an OpenCV-compatible blob (BGR order)
blob = cv2.dnn.blobFromImages(images, 1, (224, 224), (104, 117, 123), swapRB=False)
print("Second Blob: {}".format(blob.shape))
```

First we initialize our images list (Line 44), and then, using the imagePaths, we read, resize, and append each image to the list (Lines 49-52).

Using list slicing, we’ve omitted the first image from imagePaths on Line 49.

From there, we pass the images into cv2.dnn.blobFromImages as the first parameter on Line 55. All other parameters to cv2.dnn.blobFromImages are identical to cv2.dnn.blobFromImage above.

For analysis later we print blob.shape on Line 56.

We’ll next pass the blob through GoogLeNet and write the class label and prediction at the top of each image:

```python
# set the input to our pre-trained network and obtain the output
# class label predictions
net.setInput(blob)
preds = net.forward()

# loop over the input images
for (i, p) in enumerate(imagePaths[1:]):
    # load the image from disk
    image = cv2.imread(p)

    # find the top class label from the `preds` list and draw it on
    # the image
    idx = np.argsort(preds[i])[::-1][0]
    text = "Label: {}, {:.2f}%".format(classes[idx],
        preds[i][idx] * 100)
    cv2.putText(image, text, (5, 25), cv2.FONT_HERSHEY_SIMPLEX,
        0.7, (0, 0, 255), 2)

    # display the output image
    cv2.imshow("Image", image)
    cv2.waitKey(0)
```

The remaining code is essentially the same as above, only our for loop now handles looping through each of the imagePaths (again, omitting the first one as we have already classified it).
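Since preds holds one row per image in the batch, the per-image top-1 lookup can also be done for all images at once. The sketch below uses a hypothetical (3, 4) preds array in place of the real network output; np.argmax with axis=1 picks the best class per row, and fancy indexing pulls out each row’s confidence.

```python
import numpy as np

# Hypothetical batch output for 3 images and 4 classes, shape (N, C)
preds = np.array([
    [0.10, 0.60, 0.20, 0.10],
    [0.70, 0.10, 0.10, 0.10],
    [0.05, 0.05, 0.10, 0.80],
])

# One top-1 class index per row (image) in a single call
top1 = np.argmax(preds, axis=1)
# Pair each row index with its top-1 column to read off the confidence
confidences = preds[np.arange(len(preds)), top1]

for i, (idx, conf) in enumerate(zip(top1, confidences)):
    print("image {}: class {} ({:.2f}%)".format(i, idx, conf * 100))
```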

And that’s it! Let’s see the script in action in the next section.

OpenCV blobFromImage and blobFromImages results

Now we’ve reached the fun part.

Go ahead and use the “Downloads” section of this blog post to download the source code, example images, and pre-trained neural network. You will need the additional files in order to execute the code.

From there, fire up a terminal and run the following command:

$ python blob_from_images.py

The first terminal output is with respect to the first image found in the images folder where we apply the cv2.dnn.blobFromImage function:

First Blob: (1, 3, 224, 224)

The resulting beer glass image is displayed on the screen:

Figure 2: An enticing beer has been labeled and recognized with high confidence by GoogLeNet. The blob dimensions resulting from blobFromImage are displayed in the terminal.

That full beer glass makes me thirsty. But before I enjoy a beer myself, I’ll explain why the shape of the blob is (1, 3, 224, 224).

The resulting tuple has the following format:

(num_images=1, num_channels=3, height=224, width=224)

Since we’ve only processed one image, we only have one entry in our blob. The channel count is three, one per color channel. And finally, 224×224 is the spatial height and width of our input image.
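OpenCV’s dnn blobs use the NCHW layout, (num_images, num_channels, height, width). The sketch below uses a hypothetical non-square blob so the height and width axes can be told apart, and shows how to move the channel axis last to recover an ordinary H×W×C array from one blob entry.

```python
import numpy as np

# Hypothetical non-square blob: 1 image, 3 channels, 224 rows, 320 columns
blob = np.zeros((1, 3, 224, 320), dtype=np.float32)
blob[0, 2, 10, 20] = 1.0  # mark channel 2 at row 10, column 20

num_images, num_channels, height, width = blob.shape
print(num_images, num_channels, height, width)

# Move the channel axis last to get an H x W x C array for the first image
hwc = blob[0].transpose(1, 2, 0)
print(hwc.shape)       # (224, 320, 3)
print(hwc[10, 20, 2])  # the value we marked above
```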

Next, let’s build a blob from the remaining four input images.

The second blob’s shape is:

Second Blob: (4, 3, 224, 224)

Since this blob contains 4 images, num_images=4. The remaining dimensions are the same as those of the first, single-image blob.

I’ve included a sample of correctly classified images below:

Figure 3: My keyboard has been correctly identified by GoogLeNet with a prediction confidence of 81%.

Figure 4: I tested the pre-trained network on my computer monitor as well. Here we can see the input image is correctly classified using our Convolutional Neural Network.

Figure 5: A NASA space shuttle is recognized with a prediction value of over 99% by our deep neural network.

Summary

In today’s tutorial we examined OpenCV’s blobFromImage and blobFromImages deep learning functions.

These methods are used to prepare input images for classification via pre-trained deep learning models.

Both blobFromImage and blobFromImages perform mean subtraction and scaling. We can also swap the Red and Blue channels of the image depending on channel ordering. Many state-of-the-art deep learning models expect some combination of mean subtraction and scaling, and OpenCV makes these preprocessing tasks dead simple.

If you’re interested in studying deep learning in more detail, be sure to take a look at my brand new book, Deep Learning for Computer Vision with Python.

Inside the book you’ll discover:

- Super practical walkthroughs that present solutions to actual, real-world image classification problems, challenges, and competitions.

- Detailed, thorough experiments (with highly documented code) enabling you to reproduce state-of-the-art results.

- My favorite “best practices” to improve network accuracy. These techniques alone will save you enough time to pay for the book multiple times over.

- ...and much more!

Sound good?

Click here to start your journey to deep learning mastery.

Otherwise, be sure to enter your email address in the form below to be notified when future deep learning tutorials are published here on the PyImageSearch blog.

See you next week!

Downloads:

The post Deep learning: How OpenCV’s blobFromImage works appeared first on PyImageSearch.

from PyImageSearch http://ift.tt/2znAWw3

via IFTTT

Learn Ethereum Development – Build Decentralized Blockchain Apps

from The Hacker News http://ift.tt/2AobuVp

via IFTTT

The Rise of Super-Stealthy Digitally Signed Malware—Thanks to the Dark Web

from The Hacker News http://ift.tt/2AmrPda

via IFTTT

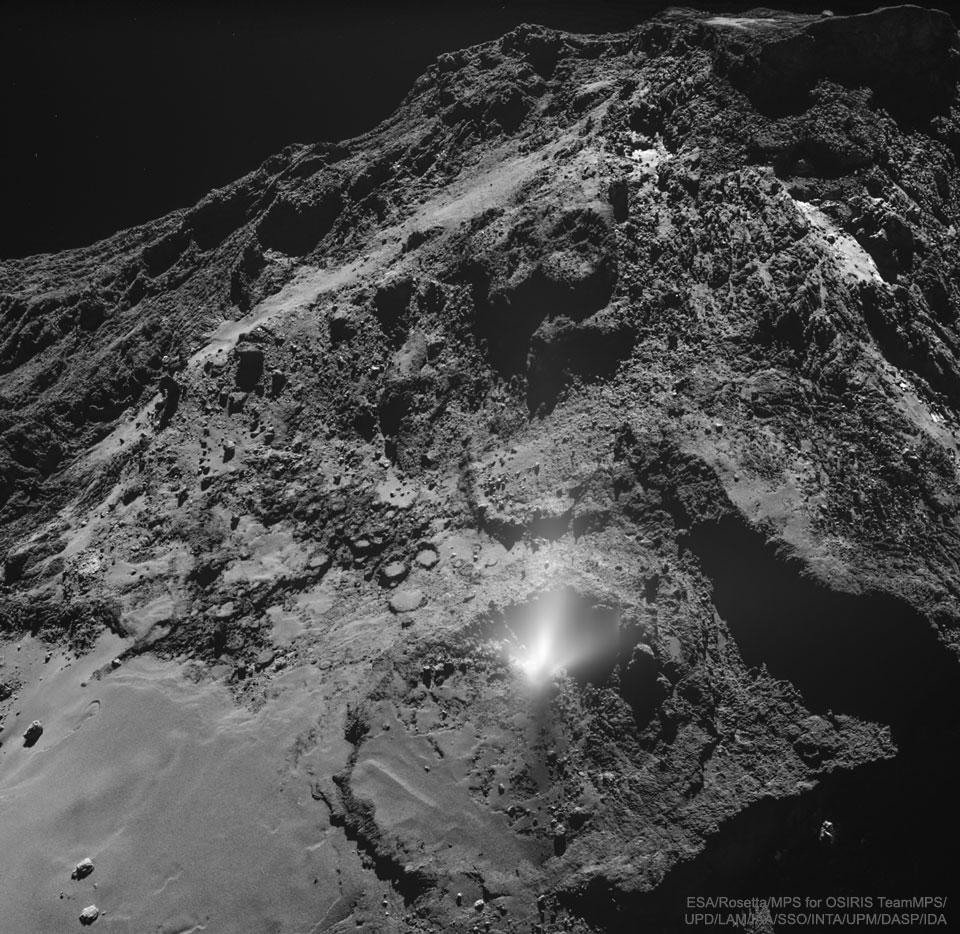

A Dust Jet from the Surface of Comet 67P